Deploying a highly available multi-master Kubernetes cluster with kubeadm

Environment

- Kubernetes version: 1.25.5

- Docker version: 19.03.9

- cri-dockerd version: 0.3.6

- containerd version: 1.7.8

- kubeadm version: 1.25.5

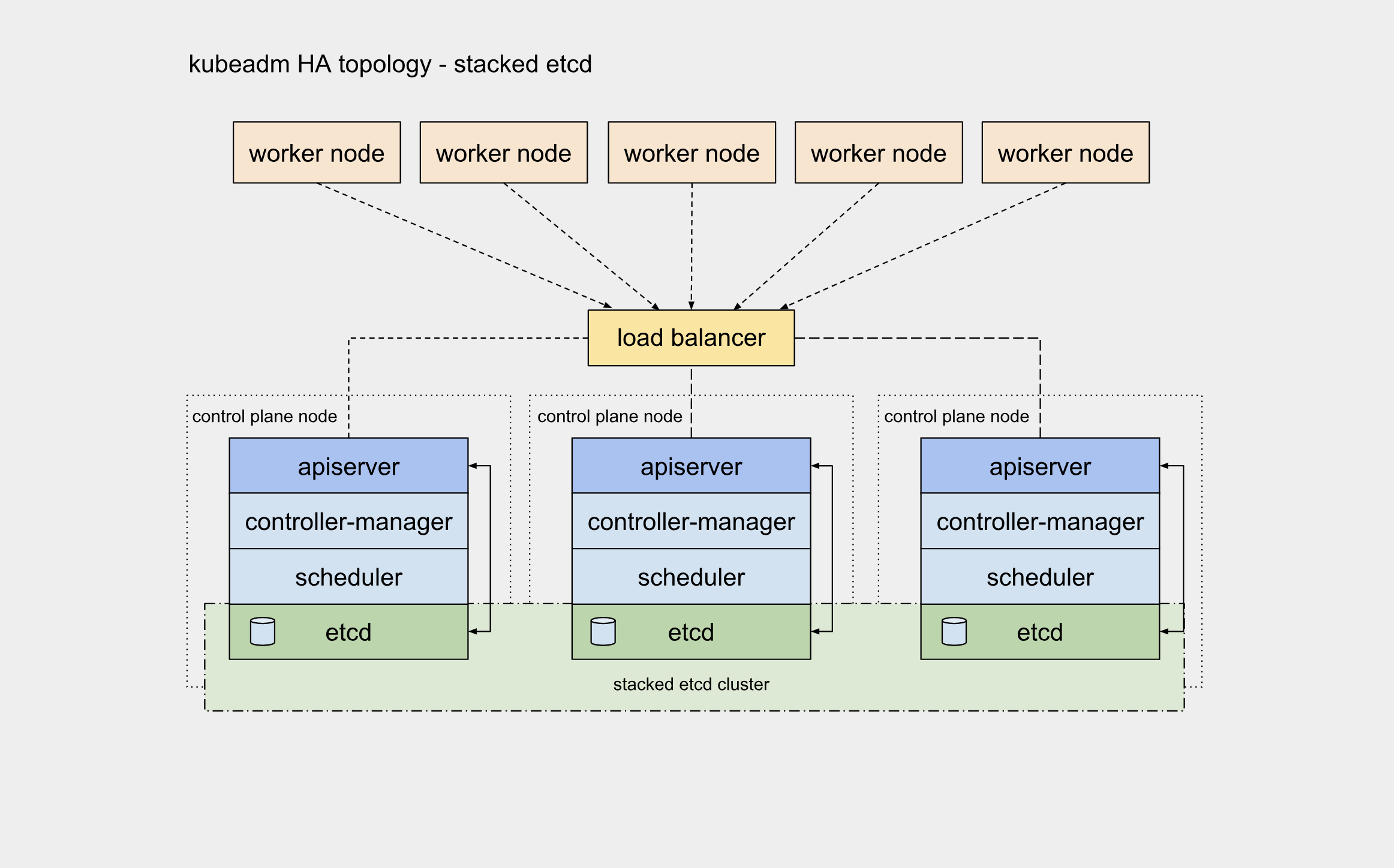

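Figure: multi-master high-availability architecture of the Kubernetes cluster.

Kubernetes stores its cluster state in etcd, and etcd decides by majority vote, so redundancy requires at least 3 etcd servers. Use an odd number to save cost: a 5-member and a 6-member cluster behave the same, since both tolerate only 2 failed servers.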
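The majority rule can be checked with a little arithmetic. The sketch below assumes the usual quorum formula, floor(N/2) + 1; the function names are illustrative and not part of any etcd tooling.

```shell
#!/bin/sh
# Quorum size for an etcd cluster with N members: floor(N/2) + 1.
etcd_quorum() {
    echo $(( $1 / 2 + 1 ))
}

# Number of members that may fail while the cluster still has quorum.
etcd_fault_tolerance() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

# Print a small table for a few cluster sizes.
for n in 1 3 5 6 7; do
    echo "members=$n quorum=$(etcd_quorum "$n") tolerated_failures=$(etcd_fault_tolerance "$n")"
done
```

For 5 and 6 members the tolerated failure count is the same, which is why the sixth member adds cost without adding resilience.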

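Six virtual machines were prepared with IPs 192.168.8.191-196, matching hostnames k8s01-k8s06. k8s01, k8s02, and k8s03 are masters and the rest are worker nodes; all run CentOS 7.6. Nginx (192.168.8.190) sits in front as the proxy server for port 6443/TCP. (This article does not set up an active/standby pair for the proxy; if you need one, haproxy + keepalived with a VIP will do.)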

| Hostname | IP | Role |
|---|---|---|
| k8s01.k8s.local | 192.168.8.191 | master |
| k8s02.k8s.local | 192.168.8.192 | master |
| k8s03.k8s.local | 192.168.8.193 | master |
| k8s04.k8s.local | 192.168.8.194 | node |
| k8s05.k8s.local | 192.168.8.195 | node |
| master-lb | 192.168.8.190 | address the nginx component listens on |

Notes:

- Three master nodes provide high availability; nginx proxies the traffic to the masters, and the masters also run the node components

- Two worker nodes

- The OS is CentOS 7.x

Base environment configuration

Configure hosts on all nodes

[root@k8s01 ~]# cat >>/etc/hosts<<EOF

192.168.8.191 k8s01 k8s01.k8s.local

192.168.8.192 k8s02 k8s02.k8s.local

192.168.8.193 k8s03 k8s03.k8s.local

192.168.8.194 k8s04 k8s04.k8s.local

192.168.8.195 k8s05 k8s05.k8s.local

192.168.8.196 k8s06 k8s06.k8s.local

EOF
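A minimal sketch for generating the host entries in a loop instead of typing them by hand. It assumes the naming scheme used in this article (k8s01 to k8s06 on 192.168.8.191 to 196, domain k8s.local); `gen_hosts` is a hypothetical helper name.

```shell
#!/bin/sh
# Print /etc/hosts entries for k8s01..k8s06 (192.168.8.191-196).
# The address range, host prefix, and domain are assumptions taken from this article.
gen_hosts() {
    i=1
    while [ "$i" -le 6 ]; do
        printf '192.168.8.%d k8s%02d k8s%02d.k8s.local\n' $((190 + i)) "$i" "$i"
        i=$((i + 1))
    done
}

# Preview the entries; append them with: gen_hosts >> /etc/hosts
gen_hosts
```

Generating the lines avoids copy-paste slips such as two entries sharing one short hostname.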

Disable the firewall, SELinux, dnsmasq, and swap on all nodes

# Disable the firewall

[root@k8s01 ~]# systemctl disable --now firewalld

# Disable dnsmasq

[root@k8s01 ~]# systemctl disable --now dnsmasq

# Disable postfix

[root@k8s01 ~]# systemctl disable --now postfix

# Disable NetworkManager

[root@k8s01 ~]# systemctl disable --now NetworkManager

# Disable SELinux

[root@k8s01 ~]# sed -ri 's/(^SELINUX=).*/\1disabled/' /etc/selinux/config

[root@k8s01 ~]# setenforce 0

# Disable swap

[root@k8s01 ~]# sed -ri 's@(^.*swap *swap.*0 0$)@#\1@' /etc/fstab

[root@k8s01 ~]# swapoff -a
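After `swapoff -a` it may be worth confirming that no swap area is still active, since kubelet by default refuses to start while swap is on. A sketch, assuming the standard /proc/swaps layout (one header line, then one line per active swap area); `swap_active` is a hypothetical helper.

```shell
#!/bin/sh
# Return 0 if the given /proc/swaps-style file lists at least one active swap area.
# Defaults to /proc/swaps; the file argument exists only to make the check easy to try out.
swap_active() {
    file="${1:-/proc/swaps}"
    # Line 1 is the header; every further line is an active swap area.
    awk 'NR > 1 { n++ } END { exit (n > 0 ? 0 : 1) }' "$file"
}
```

Usage: `if swap_active; then echo "swap is still on"; fi`.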

Raise the resource limits on all nodes

[root@k8s01 ~]# cat > /etc/security/limits.conf <<EOF

* soft core unlimited

* hard core unlimited

* soft nproc 1000000

* hard nproc 1000000

* soft nofile 1000000

* hard nofile 1000000

* soft memlock 32000

* hard memlock 32000

* soft msgqueue 8192000

EOF

SSH authentication

[root@k8s01 ~]# yum install -y sshpass

ssh-keygen -f /root/.ssh/id_rsa -P ''

export IP="192.168.8.191 192.168.8.192 192.168.8.193 192.168.8.194 192.168.8.195 192.168.8.196"

export SSHPASS=123456

for HOST in $IP;do

sshpass -e ssh-copy-id -o StrictHostKeyChecking=no $HOST

done

Upgrade the system and kernel

Tune the kernel parameters

cat >/etc/sysctl.conf<<EOF

net.ipv4.tcp_keepalive_time=600

net.ipv4.tcp_keepalive_intvl=30

net.ipv4.tcp_keepalive_probes=10

net.ipv6.conf.all.disable_ipv6=1

net.ipv6.conf.default.disable_ipv6=1

net.ipv6.conf.lo.disable_ipv6=1

net.ipv4.neigh.default.gc_stale_time=120

# The default is 1 (strict reverse-path validation of packets), which can cause packet loss
net.ipv4.conf.all.rp_filter=0

net.ipv4.conf.default.rp_filter=0

net.ipv4.conf.default.arp_announce=2

net.ipv4.conf.lo.arp_announce=2

net.ipv4.conf.all.arp_announce=2

net.ipv4.ip_local_port_range= 45001 65000

net.ipv4.ip_forward=1

net.ipv4.tcp_max_tw_buckets=6000

net.ipv4.tcp_syncookies=1

net.ipv4.tcp_synack_retries=2

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

net.netfilter.nf_conntrack_max=2310720

net.ipv6.neigh.default.gc_thresh1=8192

net.ipv6.neigh.default.gc_thresh2=32768

net.ipv6.neigh.default.gc_thresh3=65536

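# Per-CPU backlog queue length for network devices
net.core.netdev_max_backlog=16384

# Maximum read/write socket buffer size for all protocol types
net.core.rmem_max = 16777216

net.core.wmem_max = 16777216

# Length of the first backlog queue
net.ipv4.tcp_max_syn_backlog = 8096

# Length of the second backlog queue
net.core.somaxconn = 32768

# Upper limit on inotify instances per real user ID (default 128)
fs.inotify.max_user_instances=8192

# Upper limit on watches a single user can add at the same time (default 8192)
fs.inotify.max_user_watches=524288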

fs.file-max=52706963

fs.nr_open=52706963

kernel.pid_max = 4194303

net.bridge.bridge-nf-call-arptables=1

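# Avoid swap; the kernel falls back to it only when the system is about to run out of memory
vm.swappiness=0

# Do not check whether enough physical memory is available
vm.overcommit_memory=1

# Do not panic on OOM; let the OOM killer handle it
vm.panic_on_oom=0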

vm.max_map_count = 262144

EOF
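Once the parameters have been loaded (for example after the reboot later in this section), a quick comparison against /proc/sys can confirm that they took effect. The sketch below is illustrative: `check_sysctl` and the `SYSCTL_ROOT` override are hypothetical names, and it only handles single-value keys (not tab-separated ones such as `net.ipv4.ip_local_port_range`).

```shell
#!/bin/sh
# Compare one kernel parameter with an expected value by reading /proc/sys.
# SYSCTL_ROOT is a hypothetical override so the function can be tried against test files.
check_sysctl() {
    key="$1"
    expected="$2"
    # net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
    path="${SYSCTL_ROOT:-/proc/sys}/$(printf '%s' "$key" | tr . /)"
    actual="$(cat "$path" 2>/dev/null)" || { echo "MISSING $key"; return 1; }
    if [ "$actual" = "$expected" ]; then
        echo "OK $key=$actual"
    else
        echo "MISMATCH $key: expected $expected, got $actual"
        return 1
    fi
}
```

Usage: `check_sysctl net.ipv4.ip_forward 1` or `check_sysctl vm.swappiness 0`.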

Load the IPVS modules

cat >/etc/modules-load.d/ipvs.conf <<EOF

ip_vs

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

EOF

systemctl enable --now systemd-modules-load.service

# Reboot

reboot

# After the reboot, check that the modules are loaded

lsmod | grep -e ip_vs -e nf_conntrack
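The `grep` above shows what is loaded but does not say what is missing. A small sketch that reports absent modules, assuming the usual `lsmod` layout (a header line, then the module name in the first column); `missing_modules` is a hypothetical helper.

```shell
#!/bin/sh
# Read `lsmod` output on stdin and print every required module (given as arguments)
# that does not appear in the first column.
missing_modules() {
    loaded="$(awk 'NR > 1 { print $1 }')"
    for m in "$@"; do
        printf '%s\n' "$loaded" | grep -qx "$m" || echo "$m"
    done
}
```

Usage: `lsmod | missing_modules ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack`. Empty output means every listed module is loaded.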

Install the base packages

[root@k8s01 ~]# yum install curl conntrack ipvsadm ipset iptables jq sysstat libseccomp rsync wget psmisc vim net-tools telnet -y

Tune journald logging

[root@k8s01 ~]# mkdir -p /var/log/journal

[root@k8s01 ~]# mkdir -p /etc/systemd/journald.conf.d

[root@k8s01 ~]# cat >/etc/systemd/journald.conf.d/99-prophet.conf <<EOF

[Journal]

# Persist logs to disk

Storage=persistent

# Compress archived logs

Compress=yes

SyncIntervalSec=5m

RateLimitInterval=30s

RateLimitBurst=1000

# Use at most 1G of disk space

SystemMaxUse=1G

# Limit a single journal file to 10M

SystemMaxFileSize=10M

# Keep logs for 2 weeks

MaxRetentionSec=2week

# Do not forward logs to syslog

ForwardToSyslog=no

EOF

[root@k8s01 ~]# systemctl restart systemd-journald && systemctl enable systemd-journald

Configure the Kubernetes yum repository

[root@k8s01 ~]# cat > /etc/yum.repos.d/kubernetes.repo <<EOF

[kubernetes]

name=kubernetes

baseurl=https://mirrors.cloud.tencent.com/kubernetes/yum/repos/kubernetes-el7-x86_64

gpgcheck=0

EOF

# Test

[root@k8s01 ~]# yum list --showduplicates | grep kubeadm

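Deploy the container runtime

Install either docker-ce or containerd; one of them is enough, and it must be installed on every node that runs kubelet. From Kubernetes 1.24 onward, using docker-ce as the container runtime additionally requires the cri-dockerd plugin.

Install Docker

# Install on all nodes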

[root@k8s01 ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@k8s01 ~]# yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

[root@k8s01 ~]# yum list docker-ce --showduplicates | sort -r

[root@k8s01 ~]# yum install container-selinux -y

[root@k8s01 ~]# yum install docker-ce-3:23.0.0-1.el7.x86_64 docker-ce-cli-1:23.0.0-1.el7.x86_64 -y

[root@k8s01 ~]# systemctl enable --now docker

# Verify

[root@k8s01 ~]# docker info

# Configure Docker. Tip: newer kubelet versions recommend systemd, so switch Docker's CgroupDriver to systemd

[root@k8s01 ~]# cat >/etc/docker/daemon.json <<EOF

{

"registry-mirrors": [

"https://docker.m.daocloud.io",

"https://hub-mirror.c.163.com"

],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m",

"max-file": "10"

},

"storage-opts":["overlay2.override_kernel_check=true"],

"live-restore": true

}

EOF

[root@k8s01 ~]# systemctl restart docker

# Verify

[root@k8s01 ~]# docker info

...

Cgroup Driver: systemd

...

Install cri-dockerd; download the RPM package first

[root@k8s01 ~]# wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.6/cri-dockerd-0.3.6.20231018204925.877dc6a4-0.el7.x86_64.rpm

[root@k8s01 ~]# rpm -ivh cri-dockerd-0.3.6.20231018204925.877dc6a4-0.el7.x86_64.rpm

# Edit the configuration

[root@k8s01 ~]# vim /usr/lib/systemd/system/cri-docker.service

...

[Service]

Type=notify

ExecStart=/usr/bin/cri-dockerd --container-runtime-endpoint fd:// --network-plugin=cni --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.8

...

# Start

[root@k8s01 ~]# systemctl enable --now cri-docker.socket

[root@k8s01 ~]# systemctl enable --now cri-docker.service

# Verify

[root@k8s01 ~]# systemctl status cri-docker

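Install containerd

Upgrade libseccomp before installing containerd. The libseccomp that yum provides on CentOS 7 is version 2.3, which does not meet the requirements of current containerd releases; version 2.4 or later is needed.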

[root@k8s01 ~]# rpm -qa | grep libseccomp

libseccomp-devel-2.3.1-4.el7.x86_64

libseccomp-2.3.1-4.el7.x86_64

[root@k8s01 ~]# rpm -e libseccomp-devel-2.3.1-4.el7.x86_64 --nodeps

[root@k8s01 ~]# rpm -e libseccomp-2.3.1-4.el7.x86_64 --nodeps

# Download a package newer than 2.4

[root@k8s01 ~]# wget http://rpmfind.net/linux/centos/8-stream/BaseOS/x86_64/os/Packages/libseccomp-2.5.1-1.el8.x86_64.rpm

# Install

[root@k8s01 ~]# rpm -ivh libseccomp-2.5.1-1.el8.x86_64.rpm

warning: libseccomp-2.5.1-1.el8.x86_64.rpm: Header V3 RSA/SHA256 Signature, key ID 8483c65d: NOKEY

Preparing... ################################# [100%]

Updating / installing...

1:libseccomp-2.5.1-1.el8 ################################# [100%]

# Check the current version

[root@k8s01 ~]# rpm -qa | grep libseccomp

libseccomp-2.5.1-1.el8.x86_64

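Download the containerd binary package. For versions above 1.5.5 runc has to be downloaded separately: the runc shipped in the official package is dynamically linked, and CentOS 7 lacks the required libraries, so containers fail to run properly. containerd-1.7.8-linux-amd64.tar.gz contains only containerd.

cri-containerd-cni-1.7.8-linux-amd64.tar.gz contains containerd together with the CRI tooling, runc, and related tools; this is the recommended package.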

[root@k8s01 ~]# wget https://github.com/containerd/containerd/releases/download/v1.7.8/cri-containerd-cni-1.7.8-linux-amd64.tar.gz

[root@k8s01 ~]# tar xf cri-containerd-cni-1.7.8-linux-amd64.tar.gz -C /

# Create the systemd unit file

[root@k8s01 ~]# cat > /etc/systemd/system/containerd.service <<EOF

[Unit]

Description=containerd container runtime

Documentation=https://containerd.io

After=network.target local-fs.target

[Service]

ExecStartPre=-/sbin/modprobe overlay

ExecStart=/usr/local/bin/containerd

Type=notify

Delegate=yes

KillMode=process

Restart=always

RestartSec=5

LimitNPROC=infinity

LimitCORE=infinity

LimitNOFILE=infinity

TasksMax=infinity

OOMScoreAdjust=-999

[Install]

WantedBy=multi-user.target

EOF

# Start

[root@k8s01 ~]# systemctl enable --now containerd.service

# Create the configuration file

[root@k8s01 ~]# mkdir /etc/containerd

[root@k8s01 ~]# /usr/local/bin/containerd config default > /etc/containerd/config.toml

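# Edit the configuration (the default sandbox image registry is registry.k8s.io in recent containerd releases and k8s.gcr.io in older ones)

[root@k8s01 ~]# sed -ri 's#(registry\.k8s\.io|k8s\.gcr\.io)#registry.aliyuncs.com/google_containers#' /etc/containerd/config.toml

[root@k8s01 ~]# sed -i 's/SystemdCgroup \= false/SystemdCgroup \= true/' /etc/containerd/config.toml

# Restart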

[root@k8s01 ~]# systemctl restart containerd
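Because the two `sed` edits silently do nothing when their patterns do not match, it can help to read the values back. A sketch with two hypothetical helpers, assuming the `SystemdCgroup` and `sandbox_image` keys that `containerd config default` writes into config.toml:

```shell
#!/bin/sh
# Return 0 if the containerd config enables the systemd cgroup driver.
systemd_cgroup_enabled() {
    grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "${1:-/etc/containerd/config.toml}"
}

# Print the configured sandbox (pause) image.
sandbox_image() {
    sed -n 's/^[[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*"\(.*\)".*/\1/p' "${1:-/etc/containerd/config.toml}"
}
```

Usage: `systemd_cgroup_enabled && sandbox_image`. The printed image should point at the mirror registry rather than the upstream one.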

Install the high-availability component nginx

# Extract

[root@nginx ~]# tar xf nginx.tar.gz -C /usr/bin/

# Generate the configuration file

[root@nginx ~]# mkdir /etc/nginx -p

[root@nginx ~]# mkdir /var/log/nginx -p

[root@nginx ~]# cat >/etc/nginx/nginx.conf<<EOF

user root;

worker_processes 1;

error_log /var/log/nginx/error.log warn;

pid /var/log/nginx/nginx.pid;

events {

worker_connections 3000;

}

stream {

upstream apiservers {

server 192.168.8.191:6443 max_fails=2 fail_timeout=3s;

server 192.168.8.192:6443 max_fails=2 fail_timeout=3s;

server 192.168.8.193:6443 max_fails=2 fail_timeout=3s;

}

server {

listen 6443;

proxy_connect_timeout 1s;

proxy_pass apiservers;

}

}

EOF

# Create the systemd unit file

[root@nginx ~]# cat >/etc/systemd/system/nginx.service <<EOF

[Unit]

Description=nginx proxy

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=forking

ExecStartPre=/usr/bin/nginx -c /etc/nginx/nginx.conf -p /etc/nginx -t

ExecStart=/usr/bin/nginx -c /etc/nginx/nginx.conf -p /etc/nginx

ExecReload=/usr/bin/nginx -c /etc/nginx/nginx.conf -p /etc/nginx -s reload

PrivateTmp=true

Restart=always

RestartSec=15

StartLimitInterval=0

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

# Start

[root@nginx ~]# systemctl enable --now nginx.service

# Verify

[root@nginx ~]# ss -ntl | grep 6443

LISTEN 0 511 0.0.0.0:6443 *:*
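The upstream block in nginx.conf lists one `server` line per master. If the set of masters changes, the block can be regenerated rather than edited by hand. A sketch, assuming the same port and failure parameters as the configuration above; `render_upstream` is a hypothetical helper.

```shell
#!/bin/sh
# Print an nginx stream upstream block with one server line per master IP.
# Port 6443 and the max_fails/fail_timeout values mirror the nginx.conf in this article.
render_upstream() {
    echo "upstream apiservers {"
    for ip in "$@"; do
        echo "    server $ip:6443 max_fails=2 fail_timeout=3s;"
    done
    echo "}"
}

render_upstream 192.168.8.191 192.168.8.192 192.168.8.193
```

The output can be pasted into the `stream { ... }` section, followed by `nginx -t` to validate the file before reloading.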

Install the Kubernetes components

# Install kubeadm on all nodes

[root@k8s01 ~]# yum list kubeadm.x86_64 --showduplicates | sort -r # List all available versions

# Install 1.25.5

[root@k8s01 ~]# yum install kubeadm-1.25.5-0 kubelet-1.25.5-0 kubectl-1.25.5-0 -y

# Configure kubelet

[root@k8s01 ~]# DOCKER_CGROUPS=$(docker info --format '{{.CgroupDriver}}')

[root@k8s01 ~]# cat >/etc/sysconfig/kubelet<<EOF

KUBELET_EXTRA_ARGS="--cgroup-driver=$DOCKER_CGROUPS"

EOF

# Restart kubelet

[root@k8s01 ~]# systemctl daemon-reload && systemctl restart kubelet && systemctl enable kubelet
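The kubelet cgroup driver has to match Docker's. A minimal sketch for extracting just the driver name from plain `docker info` text, since recent Docker versions also print a `Cgroup Version` line that a broad `grep Cgroup` would pick up as well; `cgroup_driver` is a hypothetical helper.

```shell
#!/bin/sh
# Read `docker info` output on stdin and print only the cgroup driver name.
cgroup_driver() {
    awk -F': *' '/^[[:space:]]*Cgroup Driver:/ { print $2; exit }'
}
```

Usage: `docker info 2>/dev/null | cgroup_driver`, which should print `systemd` once daemon.json has been applied.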

Kubernetes cluster setup

Prepare the initialization configuration file

# Generate the kubeadm configuration file

[root@k8s01 ~]# cat >kubeadm-config.yaml<<EOF

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.8.191
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/cri-dockerd.sock # Change this to match your container runtime
  imagePullPolicy: IfNotPresent
  name: k8s01.k8s.local
  taints: null
---
apiServer:
  certSANs: # Add this entry manually
  - 127.0.0.1 # Extra address to include in the API server certificate
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 192.168.8.190:6443
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.25.5
networking:
  dnsDomain: cluster.local
  podSubnet: 10.244.0.0/16
  serviceSubnet: 10.96.0.0/16
scheduler: {}

EOF

Initialize the Kubernetes cluster

Run this on one master node only

[root@k8s01 ~]# kubeadm init --config kubeadm-config.yaml --upload-certs

# Output like the following means the initialization succeeded

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.8.190:6443 --token 46uf0z.ff0hirzur8m219u5 \

--discovery-token-ca-cert-hash sha256:f9d573576a51756c97f5d5a30f8b0d69340ec07f750131232d80c4dfcaa3bb82 \

--control-plane --certificate-key ea2d459317eb0136274caa3d262d12439fcc3a90d58a67fec8bb1dba773f15a4

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.8.190:6443 --token 46uf0z.ff0hirzur8m219u5 \

--discovery-token-ca-cert-hash sha256:f9d573576a51756c97f5d5a30f8b0d69340ec07f750131232d80c4dfcaa3bb82

Join the remaining master nodes

[root@k8s02 ~]# kubeadm join 192.168.8.190:6443 --token eo4ss8.1g4eqkvztlcve6qr \

--discovery-token-ca-cert-hash sha256:59b45d6cd9d1da04db50f687620ba19c1f67e04ad4484f8553f9b3b656560e49 \

--control-plane --certificate-key ba787e9ecc748023e8011b91a081b62283fadfc15582ff11b0a5c5f92f6c552f \

--cri-socket unix:///var/run/cri-dockerd.sock

Join the worker nodes

[root@k8s04 ~]# kubeadm join 192.168.8.190:6443 --token eo4ss8.1g4eqkvztlcve6qr \

--discovery-token-ca-cert-hash sha256:59b45d6cd9d1da04db50f687620ba19c1f67e04ad4484f8553f9b3b656560e49 \

--cri-socket unix:///var/run/cri-dockerd.sock
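Join commands are easy to mangle when copied between terminals. A sketch of two sanity checks to run before pasting a token and hash, assuming the kubeadm token format `[a-z0-9]{6}.[a-z0-9]{16}` and a `sha256:` prefix followed by 64 hex digits; both function names are hypothetical.

```shell
#!/bin/sh
# Return 0 if the argument looks like a kubeadm bootstrap token.
valid_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Return 0 if the argument looks like a discovery-token-ca-cert-hash value.
valid_ca_cert_hash() {
    printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}
```

Usage: `valid_bootstrap_token eo4ss8.1g4eqkvztlcve6qr && echo ok`. If the token has expired (the TTL in the configuration above is 24h), a fresh join command can be printed on a master with `kubeadm token create --print-join-command`.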

Node settings

[root@k8s01 ~]# kubectl label nodes k8s01.k8s.local node-role.kubernetes.io/master=

[root@k8s01 ~]# kubectl label nodes k8s02.k8s.local node-role.kubernetes.io/master=

[root@k8s01 ~]# kubectl label nodes k8s03.k8s.local node-role.kubernetes.io/master=

[root@k8s01 ~]# kubectl label nodes k8s04.k8s.local node-role.kubernetes.io/worker=

[root@k8s01 ~]# kubectl label nodes k8s05.k8s.local node-role.kubernetes.io/worker=

[root@k8s01 ~]# kubectl label nodes k8s06.k8s.local node-role.kubernetes.io/worker=

# Let the masters take part in scheduling by removing the control-plane taint

[root@k8s01 ~]# kubectl taint nodes k8s03.k8s.local node-role.kubernetes.io/control-plane:NoSchedule-

[root@k8s01 ~]# kubectl taint nodes k8s02.k8s.local node-role.kubernetes.io/control-plane:NoSchedule-

[root@k8s01 ~]# kubectl taint nodes k8s01.k8s.local node-role.kubernetes.io/control-plane:NoSchedule-

Installing the other components

Install the Calico network component

Download the YAML file

[root@k8s01 ~]# wget https://docs.tigera.io/archive/v3.21/manifests/calico-etcd.yaml

Configure the etcd connection

If etcd uses HTTPS, the certificates must be configured as well (ConfigMap, Secret)

[root@k8s01 ~]# cat /etc/kubernetes/pki/etcd/ca.crt | base64 -w 0

LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSUM5VENDQWQyZ0F3SUJBZ0lCQURBTkJna3Foa2lHOXcwQkFRc0ZBREFTTVJBd0RnWURWUVFERXdkbGRHTmsKTFdOaE1CNFhEVEkwTURReE9URTJOVE13TjFvWERUTTBNRFF4TnpFMk5UTXdOMW93RWpFUU1BNEdBMVVFQXhNSApaWFJqWkMxallUQ0NBU0l3RFFZSktvWklodmNOQVFFQkJRQURnZ0VQQURDQ0FRb0NnZ0VCQUxFVGtDSHV3Tmp6Cm9MUU9HZGlJb1VNQ1Z0WEZROVQxSVFMS3dJY3VrNi82Wnd1RzVnSlRCT1ZEdUpDUUJPekVyZVRnQnBXUXRRcEIKN3Z2RllMa2dZdXRCWUpSRklPNit3Y2taMGNKSzZWSHhSRHhDNU5sRU8yVytxSjF1Ry92RlJjWVp0MUhzSmdWUwpCYVVZc1BIcmVyczJTdkZra3NTb1lLdnVPOWtRb3QvcGJ2VDZYLzdSdUhUNnM0UE9WT2VQSGkxYWRoZzduZDNtCkhHUWdjTVFaQk9uSCsxYkV1L2NGbHJ2RTBvTnExbUoxWXNXbVVYR29DKzNZL1BwaCs0UGJRR25uU05LQXVxa3AKK0dPUmZpcHZ6UmdHWXExVUhZdHNwaFFjdVFCN2gra0psaDREZjljRTUvUSttMUczTkJ0dzhmWjVnUmhtcTRLcQpoa0VBU3Z3bVZaVUNBd0VBQWFOV01GUXdEZ1lEVlIwUEFRSC9CQVFEQWdLa01BOEdBMVVkRXdFQi93UUZNQU1CCkFmOHdIUVlEVlIwT0JCWUVGS1ZKY1N2bHQ3WERXbkd6VzRjbW9YYUNBeSt3TUJJR0ExVWRFUVFMTUFtQ0IyVjAKWTJRdFkyRXdEUVlKS29aSWh2Y05BUUVMQlFBRGdnRUJBR3lwWjBnU1JHSVZQNUNZWklSa1BhSmNiZ0xsVmpEVQpLN0JOSEpPVVpmblhtVzdQbitnMTZvTUw3K0lMcjFjRk85MGdJMGQvYytQZDkwbTh6Tjk5bEhCeG9JNmxZUU9yCk9TVHRzOW10NmtZSGF6cUVzQzZ5emN4cEgxRnQxRW5HOHJwSGN6VTFuVklVTWdxS0lGOGRwQW5LV3hGd1pqUTUKUngwMEM3eStHeFVxS0wvL0IvS3Q2NlpZMG1YcGFyamJLUUhjaTVLQUgwanpsTjFRVVN4R3FBYjlUOHpiK0o1Lwo0bXJacjJuVm8zTW5aVWs2MElIOFZYZ3VRQ0RiZTY3Q1BOdzIwZlJnM3VlRWJSci9rdmJJVnFBSzNCVXhMbWViCmxqUU5XM1huM3hpeVpBc0x1MDFUVnFIcTgwenNsR1BuZHBCcGExRThxRzRCL0NPclFDeVZXaWs9Ci0tLS0tRU5EIENFUlRJRklDQVRFLS0tLS0K

[root@k8s01 ~]# cat /etc/kubernetes/pki/etcd/server.crt | base64 -w 0

LS0tLS1CRUdJTiBDRVJUSUZJQ0FURS0tLS0tCk1JSURXVENDQWtHZ0F3SUJBZ0lJYVBXeWpHMVlESFF3RFFZSktvWklodmNOQVFFTEJRQXdFakVRTUE0R0ExVUUKQXhNSFpYUmpaQzFqWVRBZUZ3MHlOREEwTVRreE5qVXpNRGRhRncweU5UQTBNVGt4TmpVek1EZGFNQnd4R2pBWQpCZ05WQkFNVEVXczRjekF4TG1WalpHbG5hWFF1WTI5dE1JSUJJakFOQmdrcWhraUc5dzBCQVFFRkFBT0NBUThBCk1JSUJDZ0tDQVFFQXNuUXgvb0JBOUU0UTZpRDh0cnplZkJ6dWJnN1M0aVdWR1Nyamlvb1NtNzA2SjBTdUgzd2EKOWhhVEVxR3FHK2pBbjI3M0xRZUROMW1UZXNNZnYweTNkSG4vK1U3bmlTR1pibEZVNUhmOE1tU2FOcDQrL2tFSgpseXNMbGpwbFpjQitnR29IWERpVG50TE1SczNST3hwWnhGTDRiNGtmVllYMlJzR3FMTFRTVk44N1ZEZGErcDJtCm5RWUZpV3BWSlFBMEc3UDlEczV2NzFDcDZWRU1qelRUV1dEVnZYb1ZPVndZV1VueGhwTnJMbTlkMFBSZ1hHd0cKUHg3eFFkaThtM09KaFY1ZWQ1S0JzL2dlQnNsWlEyRlhPcnZzbEtHTE9obWUzWXlGSE8wTkpCWlBjZUxDVURPdwpIQ0p4b20xc1lDcXJDanZRLzJ0bnpYeHVNT3RReTVQVHR3SURBUUFCbzRHb01JR2xNQTRHQTFVZER3RUIvd1FFCkF3SUZvREFkQmdOVkhTVUVGakFVQmdnckJnRUZCUWNEQVFZSUt3WUJCUVVIQXdJd0RBWURWUjBUQVFIL0JBSXcKQURBZkJnTlZIU01FR0RBV2dCU2xTWEVyNWJlMXcxcHhzMXVISnFGMmdnTXZzREJGQmdOVkhSRUVQakE4Z2hGcgpPSE13TVM1bFkyUnBaMmwwTG1OdmJZSUpiRzlqWVd4b2IzTjBod1RBcUFpL2h3Ui9BQUFCaHhBQUFBQUFBQUFBCkFBQUFBQUFBQUFBQk1BMEdDU3FHU0liM0RRRUJDd1VBQTRJQkFRQS9tMjNYTEFXOXFnSEV3UjRQblZ0MTRVeEYKazVHbnJ2TFROU2ozOWx6NkpGTy9MMW9Qd0gwRTBabjcrWEVQb1hhRDlsNlRqMXp5OGdOdG40ZTlickp3MG1rQwp4N1VYOUFQWk90ZFQ4Y1JCQldqc2luOWdaV0R4cmZkZG5KVC9lRGJKMUp5U3J6ZUlzeS9keElLems2ZlNNQVh3Ck1TWGozTEQ4WHZvaVBUVUw5eFdYMkthNStydmNaQ1c5RXhhYjlUOWFiSlhMOElBSFRUcHgwUVBkeHVYZ2VzNzYKclYveGpwbmF0OWVoYjBqa3RGMkwyTXdVcE8zb3hlbXhsOHhjdXJhakxveXpqT29YbFFyNjdwTXJIc3dlUkgySwpaVEFIYUd2R1M2TEpHclNQcThZWnFlWFQvZ0JoMFI4cTl0ZVJJRFNnTEJETWpSaWs0ZlJ1VDdnTXd2SXoKLS0tLS1FTkQgQ0VSVElGSUNBVEUtLS0tLQo=

[root@k8s01 ~]# cat /etc/kubernetes/pki/etcd/server.key | base64 -w 0

LS0tLS1CRUdJTiBSU0EgUFJJVkFURSBLRVktLS0tLQpNSUlFcFFJQkFBS0NBUUVBc25ReC9vQkE5RTRRNmlEOHRyemVmQnp1Ymc3UzRpV1ZHU3JqaW9vU203MDZKMFN1Ckgzd2E5aGFURXFHcUcrakFuMjczTFFlRE4xbVRlc01mdjB5M2RIbi8rVTduaVNHWmJsRlU1SGY4TW1TYU5wNCsKL2tFSmx5c0xsanBsWmNCK2dHb0hYRGlUbnRMTVJzM1JPeHBaeEZMNGI0a2ZWWVgyUnNHcUxMVFNWTjg3VkRkYQorcDJtblFZRmlXcFZKUUEwRzdQOURzNXY3MUNwNlZFTWp6VFRXV0RWdlhvVk9Wd1lXVW54aHBOckxtOWQwUFJnClhHd0dQeDd4UWRpOG0zT0poVjVlZDVLQnMvZ2VCc2xaUTJGWE9ydnNsS0dMT2htZTNZeUZITzBOSkJaUGNlTEMKVURPd0hDSnhvbTFzWUNxckNqdlEvMnRuelh4dU1PdFF5NVBUdHdJREFRQUJBb0lCQVFDV2pKSjB5OHlBanErVQpaZG50Ykc4aU0rSXVkVkVSdW5VTHMzUWRONWkxVExHWDJVZ3doYW5WUEh3OU5xZmxQYm5yaDFqOTR4MmUvM0g5CkdrcFpRQ1p4SEUrSUN6b29KWEZrY1RiMlBBZEdtRHJGbzBFdmpMekRqR3dOQkJ6dzk3R0JNbklkS2huWDNxeTUKZXY5dUppL2ZBY1JYakU0ai80MGlhbFhmdTNqS1lkUUhUSUcvQjM3ajNvdjJCRWNhQWR1OWZQM1czUWFTYkVuSApaT3M1UGtsRCtVSDVnWEhHTzFic1FFUWt2T3ZXemR4Ri9EMzMrazJQeUdCbHBKYUJ6elJldTdYVllKN0lNTFdICjBwQkthenB1NnI2UlROa1JnVWlPM0UzcGliTEVpNVdjaHlkZmpJL29ieklUMmljY3hzZDluTU42d2c3bjBwRkQKVHZreEFVT1pBb0dCQU9WdlVZWnJ6bDRJM0RoTVpYZGgrY2xuNXQxM043VGppL1dJNFliQmFSRjRxWURLVzdSQwptS2hmdldGc2JodGJBSGhMTldtdnlZUnlPUC8rVmE3Z054cVNBWm5NN1FZSEFkRldDU1g4TUo5dVp0T2pYbVpqCmEyWDhuTlZPUUtISlVhVXF1eHk3ODhTWmVnQzRqZ0FkdDkrdFc3QW85SnNjeUgxVXhNa0ROWFBOQW9HQkFNY2QKdisxWnBQcEVKK0JYQzFwR3VQUlgyZDV1aWJWZUEvcnR3OTVTMThMWlZCb1hhdWtKeDNsbllIMmRHT0xqTVBMVQpsUTVDZU5HV2ZsT2VZVWFJZFRRaUgxK2lodzEycHV1aVR3Y3VGQWVpNVZKZ0c1TzJUTUYvUkdOcUE3RmdUZWlkCk1LT2VCUTNOUVVFY2FrVHpDVHoxYlJFbDdoSWpyLzdhWVhQRU1LbVRBb0dCQUlGbEM2UjA3bmtvSkJMNE93MWUKUzBLa2Y1YU5ZdlI0bEhtUWppaXlzYS94emg3ZGdXU2pYbmN2TDU1ZlNhWFI1K3p2OVpxNlVqdUpNODVrUVN5VQpOVitkMHB0TDhLMUNqR3JrUzlxSlpWNjFJL2JlSHlJOWxkZDU1WFdEWVg0ZERSZktocWFJOWN6K0JjbWNUREdTCmtSM3NKbGF1Y20xOGlUSHQrZUdvRnFCTkFvR0FYd3F5TytiWU9xVWhxNUdnMTV4K1dCTSs2VTgxNkI1U0pBb3QKdmdjbytkYW1zRFdSVUY3Y21mUmdPRlV1TmM1ZjVPalhwUkRSZnFrbzcxemY3YnpneE51c3dnZGdFMnNaVGN3UgprTW5Md2NGZFdLNkxPengrOHJWK3ZUMHpPZzFQNHNKMUh3UWtRV3hCdzRSK3JSNmN3RDRkcTF2QmdXTUxkTWhrClQ4L0xzb1VDZ1lFQW1PSThSWE1uYVppeUF3SVkwRWFjTkNmcVVB
MHJpT3N2MU91K2xzb0RZcjNQNCtmeE1NSFUKaVRQS1BOY2MweDczaGF0Z2JuZWh3bTkvUm1vcDhwY1pxMGFXZkl6MjlxMWI2SVk0RjNBRi9GTFdqQWV4N1ZiWApDNEFNQ0pRMWFTaUJHYkdCOGs2emg5RDZRa21QTndkM3NUZzNrRk5XWmtlZHM5K1p3czB1SWowPQotLS0tLUVORCBSU0EgUFJJVkFURSBLRVktLS0tLQo=

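Put the base64-encoded strings above into the manifest: ca.crt maps to etcd-ca, server.key maps to etcd-key, and server.crt maps to etcd-cert. Also set the etcd certificate paths and the etcd endpoints (the same endpoints as in the api-server configuration, /opt/kubernetes/cfg/kube-apiserver.conf).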

[root@k8s01 ~]# vim calico-etcd.yaml

...

apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: calico-etcd-secrets
  namespace: kube-system
data:
  # Populate the following with etcd TLS configuration if desired, but leave blank if
  # not using TLS for etcd.
  # The keys below should be uncommented and the values populated with the base64
  # encoded contents of each file that would be associated with the TLS data.
  # Example command for encoding a file contents: cat <file> | base64 -w 0
  etcd-key: <base64 string of server.key from above>
  etcd-cert: <base64 string of server.crt from above>
  etcd-ca: <base64 string of ca.crt from above>

...

kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  # Configure this with the location of your etcd cluster.
  etcd_endpoints: "https://192.168.8.191:2379,https://192.168.8.192:2379,https://192.168.8.193:2379"
  # If you're using TLS enabled etcd uncomment the following.
  # You must also populate the Secret below with these files.
  etcd_ca: "/calico-secrets/etcd-ca"
  etcd_cert: "/calico-secrets/etcd-cert"
  etcd_key: "/calico-secrets/etcd-key"
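The Secret values are plain base64 encodings of the certificate files. Base64 is an encoding, not encryption, so anyone who can read the manifest can recover the key. A sketch of the encode step and its round trip, assuming GNU coreutils `base64`; `b64_file` is a hypothetical helper.

```shell
#!/bin/sh
# Encode a file as a single-line base64 string (-w 0 disables line wrapping).
b64_file() {
    base64 -w 0 < "$1"
}
```

Usage: `b64_file /etc/kubernetes/pki/etcd/ca.crt` prints the value for `etcd-ca`, and piping that output through `base64 -d` returns the original file, which is a reason to keep the edited manifest out of shared locations.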

Set the Pod CIDR (CALICO_IPV4POOL_CIDR) according to your actual network plan

[root@k8s01 ~]# vim calico-etcd.yaml

...

- name: CALICO_IPV4POOL_CIDR
  value: "10.244.0.0/16" # Change this to the pod subnet

...

Choose the working mode (CALICO_IPV4POOL_IPIP); BGP and IPIP are supported

[root@k8s01 ~]# vim calico-etcd.yaml

...

- name: CALICO_IPV4POOL_IPIP

value: "Always"

...

Apply the manifest once the changes are done

# Create Calico

[root@k8s01 ~]# kubectl apply -f calico-etcd.yaml

secret/calico-etcd-secrets created

configmap/calico-config created

clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created

clusterrole.rbac.authorization.k8s.io/calico-node created

clusterrolebinding.rbac.authorization.k8s.io/calico-node created

daemonset.apps/calico-node created

serviceaccount/calico-node created

deployment.apps/calico-kube-controllers created

serviceaccount/calico-kube-controllers created

Install the calicoctl tool

[root@k8s01 ~]# wget -O /usr/local/bin/calicoctl https://github.com/projectcalico/calicoctl/releases/download/v3.21.6/calicoctl-linux-amd64

[root@k8s01 ~]# chmod +x /usr/local/bin/calicoctl

[root@k8s01 ~]# mv /usr/local/bin/calicoctl /usr/bin/

# Verify

[root@k8s01 ~]# calicoctl node status

Calico process is running.

IPv4 BGP status

+---------------+-------------------+-------+------------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+---------------+-------------------+-------+------------+-------------+

| 192.168.8.192 | node-to-node mesh | up | 2024-04-19 | Established |

| 192.168.8.193 | node-to-node mesh | up | 2024-04-19 | Established |

| 192.168.8.194 | node-to-node mesh | up | 2024-04-19 | Established |

| 192.168.8.195 | node-to-node mesh | up | 08:50:54 | Established |

| 192.168.8.196 | node-to-node mesh | up | 08:50:56 | Established |

+---------------+-------------------+-------+------------+-------------+

IPv6 BGP status

No IPv6 peers found.

# calicoctl reads the node status from the local system, so netstat shows the same connections

[root@k8s01 ~]# netstat -antp|grep bird

tcp 0 0 0.0.0.0:179 0.0.0.0:* LISTEN 17660/bird

tcp 0 0 192.168.8.191:179 192.168.8.196:60491 ESTABLISHED 17660/bird

tcp 0 0 192.168.8.191:179 192.168.8.192:53534 ESTABLISHED 17660/bird

tcp 0 0 192.168.8.191:179 192.168.8.194:61230 ESTABLISHED 17660/bird

tcp 0 0 192.168.8.191:179 192.168.8.195:58793 ESTABLISHED 17660/bird

tcp 0 0 192.168.8.191:179 192.168.8.193:54044 ESTABLISHED 17660/bird

To see more information, calicoctl has to be pointed at the data in etcd. Create a configuration file for that:

[root@k8s01 ~]# mkdir /etc/calico

[root@k8s01 ~]# vim /etc/calico/calicoctl.cfg

apiVersion: projectcalico.org/v3
kind: CalicoAPIConfig
metadata:
spec:
  datastoreType: "etcdv3"
  etcdEndpoints: "https://192.168.8.191:2379,https://192.168.8.192:2379,https://192.168.8.193:2379"
  etcdKeyFile: "/etc/kubernetes/pki/etcd/server.key"
  etcdCertFile: "/etc/kubernetes/pki/etcd/server.crt"
  etcdCACertFile: "/etc/kubernetes/pki/etcd/ca.crt"

# Query the data

[root@k8s01 ~]# calicoctl get node

NAME

k8s01.ecdigit.com

k8s02.ecdigit.com

k8s03.ecdigit.com

k8s04.ecdigit.com

k8s05.ecdigit.com

k8s06.ecdigit.com

# List the IPAM IP pools

[root@k8s01 ~]# calicoctl get ippool

NAME CIDR SELECTOR

default-ipv4-ippool 10.244.0.0/16 all()

[root@k8s01 ~]# calicoctl get ippool -o wide

NAME CIDR NAT IPIPMODE VXLANMODE DISABLED SELECTOR

default-ipv4-ippool 10.244.0.0/16 true Always Never false all()

Important

If the flannel network component was deployed earlier, uninstall and remove flannel first; this has to be done on every node

[root@k8s01 ~]# kubectl delete -f kube-flannel.yaml

[root@k8s01 ~]# ip link delete cni0

[root@k8s01 ~]# ip link delete flannel.1

[root@k8s01 ~]# ip route

default via 192.168.8.191 dev eth0

10.244.1.0/24 via 192.168.2.63 dev eth0

10.244.2.0/24 via 192.168.2.62 dev eth0

169.254.0.0/16 dev eth0 scope link metric 1002

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.61

[root@k8s01 ~]# ip route del 10.244.1.0/24 via 192.168.2.63 dev eth0

[root@k8s01 ~]# ip route del 10.244.2.0/24 via 192.168.2.62 dev eth0

[root@k8s01 ~]# ip route

default via 192.168.8.191 dev eth0

169.254.0.0/16 dev eth0 scope link metric 1002

172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1

192.168.2.0/24 dev eth0 proto kernel scope link src 192.168.2.61

Install Metrics-server

# Download the YAML file

[root@k8s01 ~]# wget https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.1/components.yaml

# Edit the container arguments

- --cert-dir=/tmp

- --secure-port=4443

- --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname

- --kubelet-use-node-status-port

- --kubelet-insecure-tls # Add this line

# Deploy

[root@k8s01 ~]# kubectl apply -f components.yaml

# Verify

[root@k8s01 ~]# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s01.k8s.local 105m 5% 1316Mi 34%

k8s02.k8s.local 95m 4% 1097Mi 28%

k8s03.k8s.local 95m 4% 1105Mi 28%

k8s04.k8s.local 71m 1% 846Mi 22%

k8s05.k8s.local 78m 1% 803Mi 21%

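Install the dashboard

Note: since version 1.24, creating a ServiceAccount no longer creates a service-account token Secret automatically as it used to; the Secret has to be created manually.

# Download the YAML file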

[root@k8s01 ~]# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.6.0/aio/deploy/recommended.yaml

# Edit the YAML file

[root@k8s01 ~]# vim recommended.yaml

kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
spec:
  type: NodePort # Added
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001 # Added
  selector:
    k8s-app: kubernetes-dashboard

# Create

[root@k8s01 ~]# kubectl apply -f recommended.yaml

# Create the user

[root@k8s01 ~]# cat >admin.yaml<<EOF

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: v1
kind: Secret
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
  annotations:
    kubernetes.io/service-account.name: "admin-user"
type: kubernetes.io/service-account-token
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

EOF

[root@k8s01 ~]# kubectl apply -f admin.yaml

# Get the user token (the Secret created above is named admin-user)

[root@k8s01 ~]# kubectl describe secrets -n kubernetes-dashboard admin-user

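Switch kube-proxy to IPVS mode

Change kube-proxy to IPVS mode. The IPVS setting was left out when the cluster was initialized, so it has to be changed by hand:

# Edit the ConfigMap on a control-plane node

[root@k8s01 ~]# kubectl edit cm -n kube-system kube-proxy

mode: "ipvs" # Empty by default, which means iptables mode

# Roll the kube-proxy pods so they pick up the change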

[root@k8s01 ~]# kubectl patch daemonset kube-proxy -p "{\"spec\":{\"template\":{\"metadata\":{\"annotations\":{\"date\":\"`date +'%s'`\"}}}}}" -n kube-system

# Verify the working mode

[root@k8s01 ~]# curl 127.0.0.1:10249/proxyMode

ipvs
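Beyond the proxyMode endpoint, the IPVS rule table itself shows whether kube-proxy has programmed any services. A sketch that counts virtual services, assuming the usual `ipvsadm -Ln` layout in which each virtual service line starts with its protocol; `count_ipvs_services` is a hypothetical helper.

```shell
#!/bin/sh
# Read `ipvsadm -Ln` output on stdin and print the number of virtual services.
# Real-server lines start with "->" and are not counted.
count_ipvs_services() {
    awk '$1 == "TCP" || $1 == "UDP" || $1 == "SCTP" { n++ } END { print n + 0 }'
}
```

Usage: `ipvsadm -Ln | count_ipvs_services`. A count of 0 on a node with running Services suggests kube-proxy is still in iptables mode.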
