Deploying ELK + Kafka + Filebeat on Kubernetes

Environment

| Service | Purpose | Version |
|---|---|---|
| elasticsearch | Storage and search | 7.6.2 |
| filebeat | Log collection | 7.6.2 |
| logstash | Filtering | 7.3.1 |
| kibana | Visualization | 7.6.2 |
| kafka | Message broker | 2.4 |

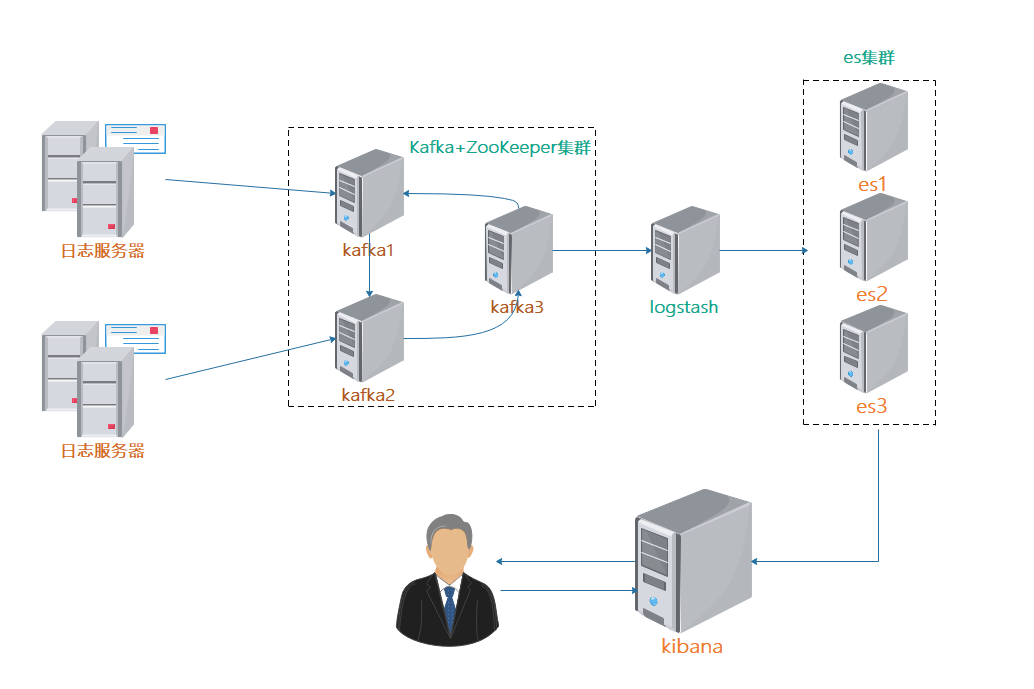

elk kafkafilebeat架构图:

Deploying Filebeat

Install Filebeat 7.6.2 on the log server.

# Download

[root@elk ~]# wget https://repo.huaweicloud.com/filebeat/7.6.2/filebeat-7.6.2-x86_64.rpm

# Install Filebeat

[root@elk ~]# rpm -ivh filebeat-7.6.2-x86_64.rpm

# Edit the configuration file (adjust to your environment)

[root@elk ~]# cat /etc/filebeat/filebeat.yml

filebeat.inputs:
- type: log
  enabled: true
  # Multiple paths can be listed here
  paths:
    - /var/log/test.log
  fields:
    level: info
    log_source: ["time-log"]
  # close_older was renamed to close_inactive in Filebeat 5.0
  close_inactive: 72h
  ignore_older: 72h
  scan_frequency: 3s
setup.template.settings:
  index.number_of_shards: 50
output.kafka:
  enabled: true
  # hosts: addresses of the Kafka cluster
  hosts: ["192.168.50.205:31090", "192.168.50.205:31091", "192.168.50.205:31092"]
  topic: axhome
  required_acks: 1
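Before starting the service, it can help to confirm that the file parses and that the brokers are reachable. The sketch below wraps Filebeat's own `test config` and `test output` subcommands. The function names `filebeat_check` and `append_test_line` are hypothetical helpers introduced here for illustration, not part of Filebeat.

```shell
# Hypothetical helpers for a quick Filebeat smoke test.

# Validate the config file, then try to connect to the configured output.
# $1 = config path (defaults to the path used in this post).
filebeat_check() {
    cfg="${1:-/etc/filebeat/filebeat.yml}"
    filebeat test config -c "$cfg" && filebeat test output -c "$cfg"
}

# Append one timestamped line to a watched log file so that Filebeat
# has something to ship. $1 = log file, $2 = message text.
append_test_line() {
    file="$1"
    msg="$2"
    printf '%s %s\n' "$(date -u +%Y-%m-%dT%H:%M:%SZ)" "$msg" >> "$file"
}

# Example (run as root on the log server):
#   filebeat_check && systemctl enable --now filebeat
#   append_test_line /var/log/test.log "hello from filebeat"
```

The output test only succeeds once the Kafka cluster from the next section is up, so it may be more useful to rerun it after that step.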

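Deploying the Kafka and ZooKeeper cluster

This post deploys with Helm; an earlier post on this blog covers deploying a Kafka cluster with a StatefulSet.

# Add the Helm repository (it contains the Kafka chart)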

[root@k8s01 home]# helm repo add incubator http://storage.googleapis.com/kubernetes-charts-incubator

# Download the Kafka chart

[root@k8s01 home]# helm fetch incubator/kafka

# After the download, the current directory contains the archive kafka-0.21.2.tgz

[root@k8s01 home]# tar xf kafka-0.21.2.tgz

# Enter the extracted kafka directory and edit values.yaml

[root@k8s01 home]# cd kafka

[root@k8s01 kafka]# ls

charts containers.conf README.md requirements.yaml values.yaml

Chart.yaml OWNERS requirements.lock templates

The following settings need to be changed:

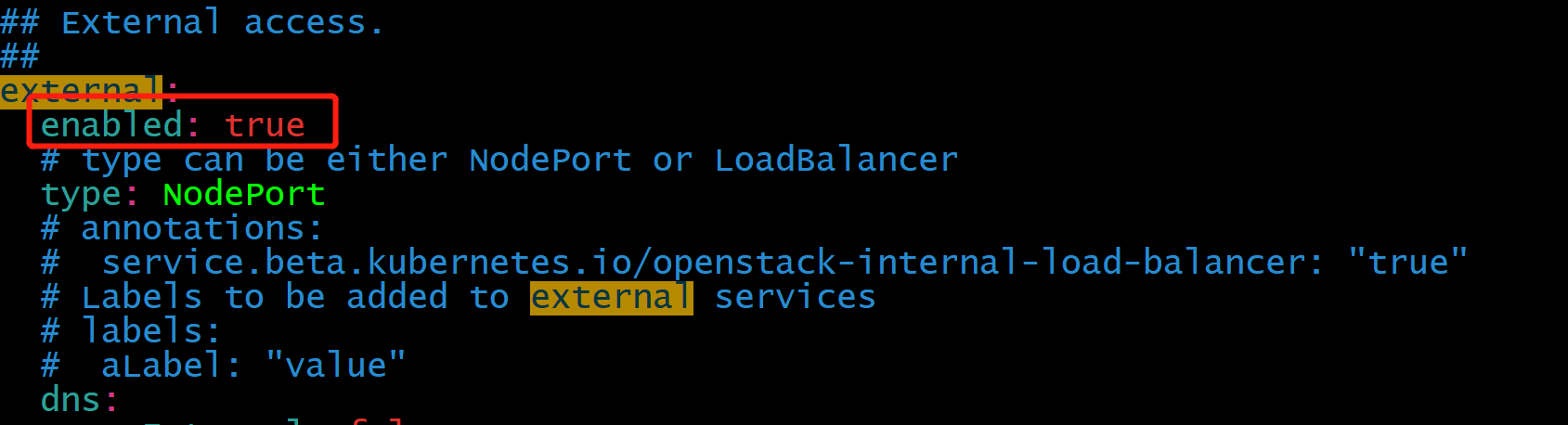

First, make Kafka reachable from outside the Kubernetes cluster: set external.enabled to true.

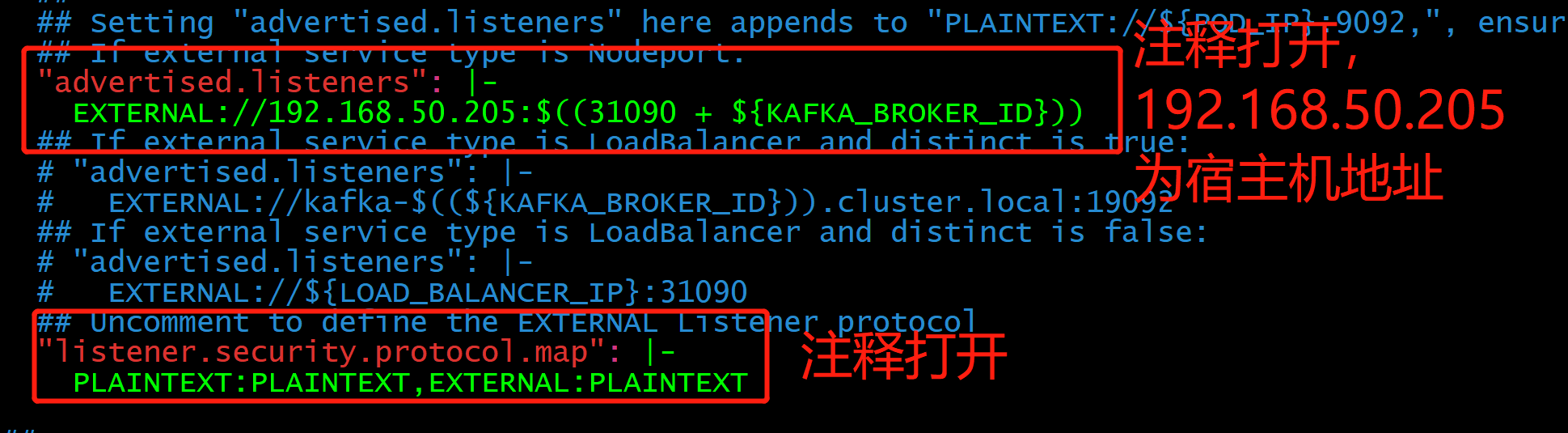

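Next, find configurationOverrides. The two entries outlined in red in the screenshots below are commented out by default; uncomment them. If you have set up cross-network access to Kafka before, you will recognize why the Kubernetes host IP is written here: clients outside the cluster connect to whatever address the brokers advertise.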

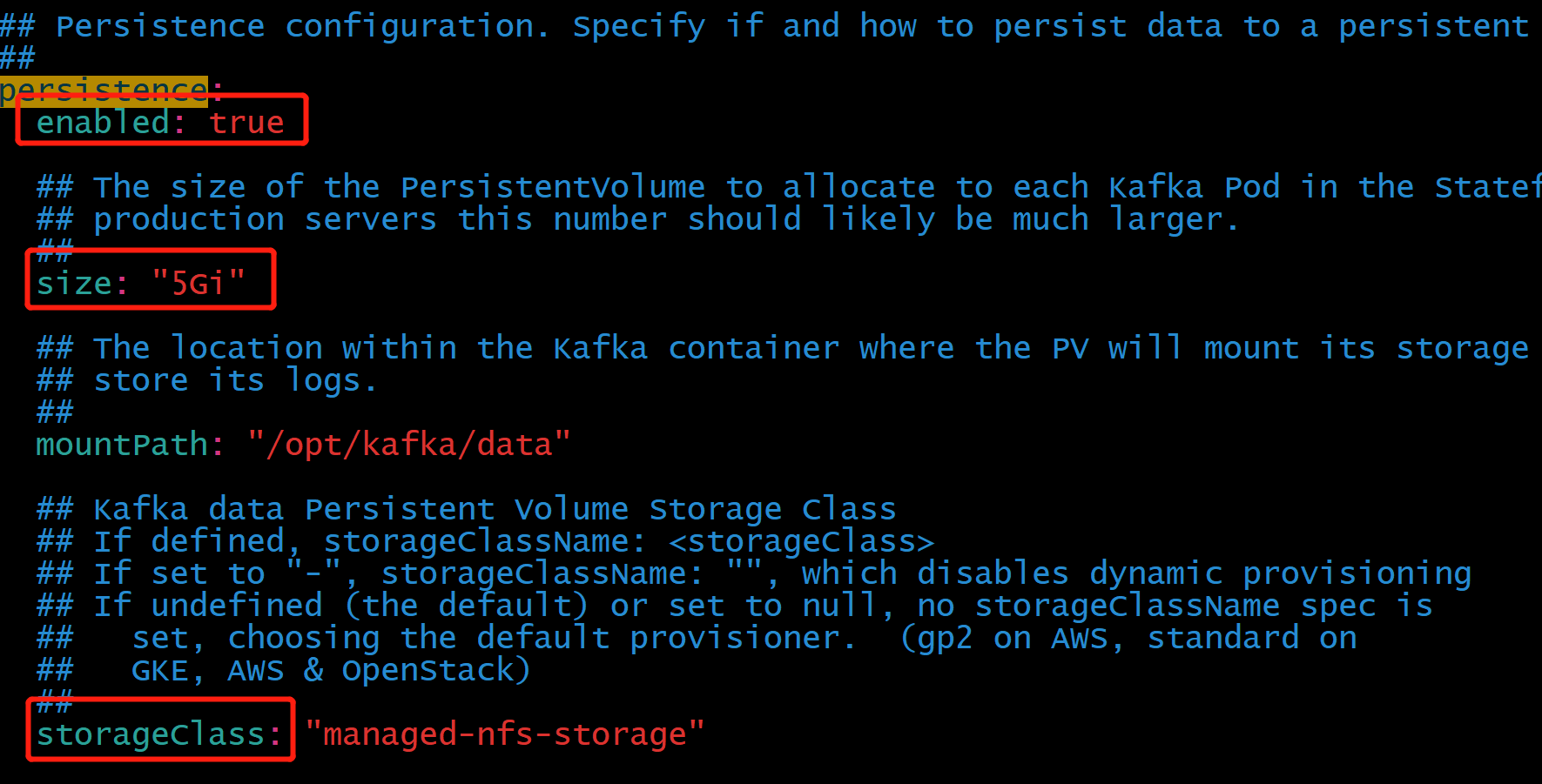

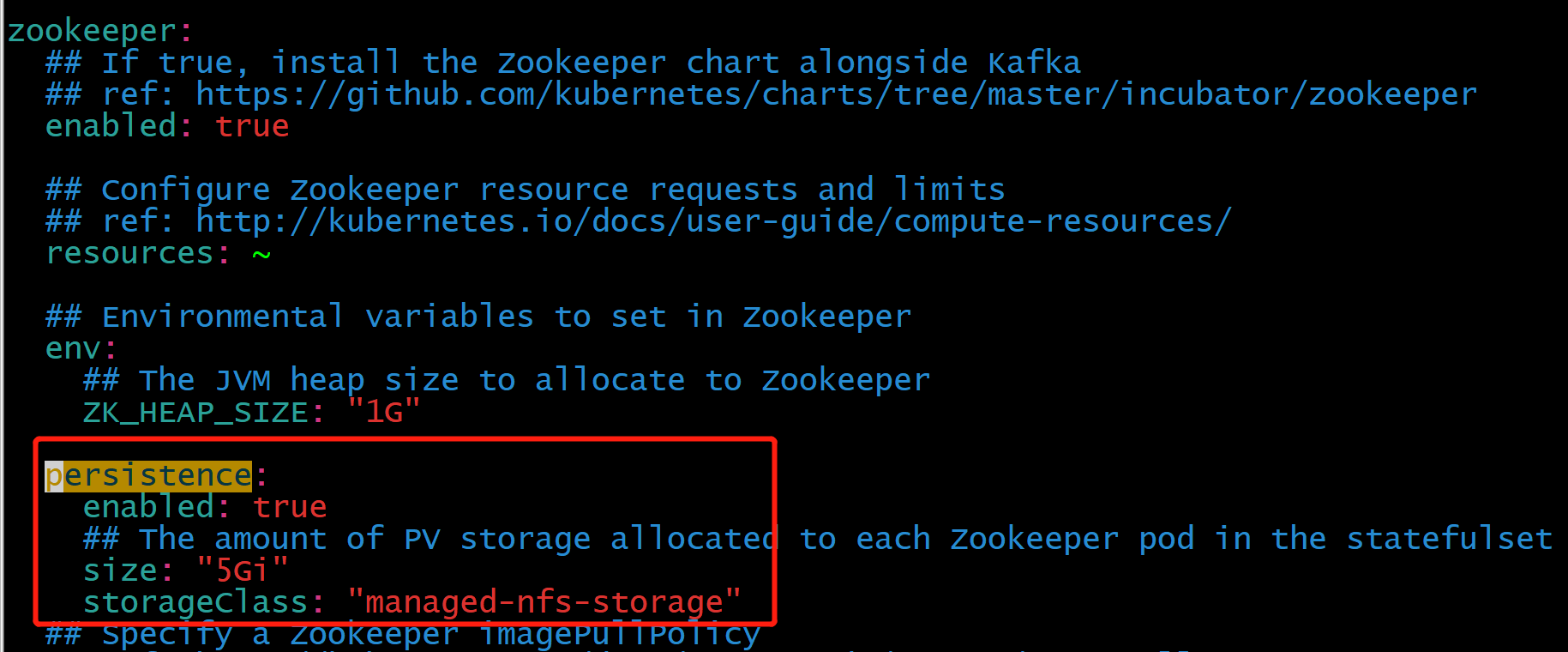

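Then configure the data volume: find persistence, adjust the size as needed, and set the name of the StorageClass you have prepared.

Configure the ZooKeeper data volume in the same way.

Once the changes are made, deploy the Kafka and ZooKeeper cluster: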

[root@k8s01 kafka]# helm install --name-template kafka -f values.yaml . --namespace test-env

# Wait a few minutes for the cluster to initialize, then check the pods

[root@k8s01 kafka]# kubectl get -n test-env po

NAME READY STATUS RESTARTS AGE

kafka-0 1/1 Running 0 5h10m

kafka-1 1/1 Running 0 5h9m

kafka-2 1/1 Running 0 5h8m

kafka-zookeeper-0 1/1 Running 0 5h11m

kafka-zookeeper-1 1/1 Running 0 5h10m

kafka-zookeeper-2 1/1 Running 0 5h10m

[root@k8s01 kafka]# kubectl get -n test-env svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kafka ClusterIP 10.111.207.146 <none> 9092/TCP 5h18m

kafka-0-external NodePort 10.97.107.169 <none> 19092:31090/TCP 5h18m

kafka-1-external NodePort 10.104.26.180 <none> 19092:31091/TCP 5h18m

kafka-2-external NodePort 10.99.233.249 <none> 19092:31092/TCP 5h18m

kafka-headless ClusterIP None <none> 9092/TCP 5h18m

kafka-zookeeper ClusterIP 10.109.8.126 <none> 2181/TCP 5h18m

kafka-zookeeper-headless ClusterIP None <none> 2181/TCP,3888/TCP,2888/TCP 5h18m
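The service list shows each broker exposed on its own NodePort, counting up from 31090. A minimal sketch of how the bootstrap list used by Filebeat and Logstash could be derived from that pattern follows. `broker_list` is a hypothetical helper, and the default first port of 31090 is simply taken from the output above.

```shell
# Hypothetical helper: build the external bootstrap list for N brokers.
# Assumes broker i is exposed on NodePort (first_port + i), which matches
# the kafka-N-external services listed above.
# $1 = node IP, $2 = broker count, $3 = first NodePort (default 31090).
broker_list() {
    host="$1"
    count="$2"
    first_port="${3:-31090}"
    i=0
    list=""
    while [ "$i" -lt "$count" ]; do
        # Add a comma separator only when the list already has an entry.
        list="${list}${list:+,}${host}:$((first_port + i))"
        i=$((i + 1))
    done
    printf '%s\n' "$list"
}

# Example, from a machine that has the Kafka CLI tools installed:
#   kafka-console-consumer.sh \
#     --bootstrap-server "$(broker_list 192.168.50.205 3)" \
#     --topic axhome --from-beginning
```

If the consumer prints the JSON events that Filebeat produced, the external listeners are advertised correctly.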

Deploying Logstash

First, create the Logstash configuration file.

[root@k8s01 elk]# cat config/containers.conf
input {
  kafka {
    bootstrap_servers => "192.168.50.205:31090,192.168.50.205:31091,192.168.50.205:31092"
    topics => ["axhome"]
    codec => json
  }
}
output {
  elasticsearch {
    hosts => ["http://192.168.50.205:30023"]
    index => "%{[fields][log_source]}-%{+YYYY-MM}"
  }
}

[root@k8s01 elk]# kubectl create cm logstash-conf --from-file=config/containers.conf -n test-env
configmap/logstash-conf created
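The index option combines the log_source field set by Filebeat with the year and month of the event's @timestamp, which Logstash formats in UTC. A rough sketch of the resulting name is shown below; `index_name` is a hypothetical helper that only mimics the pattern and does not call Logstash.

```shell
# Hypothetical helper mirroring "%{[fields][log_source]}-%{+YYYY-MM}".
# $1 = value of fields.log_source
# $2 = event @timestamp as an ISO 8601 string in UTC
index_name() {
    # The first seven characters of an ISO 8601 timestamp are "YYYY-MM".
    month="$(printf '%s' "$2" | cut -c1-7)"
    printf '%s-%s\n' "$1" "$month"
}

# Example:
#   index_name time-log 2020-05-17T08:30:00.000Z
```

With the Filebeat settings used earlier, events land in one index per month, so an index pattern such as time-log-* would cover all of them.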

Then deploy Logstash.

[root@k8s01 elk]# vim logstash.yaml

# extensions/v1beta1 Deployments were removed in Kubernetes 1.16; use apps/v1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash
  namespace: test-env
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      labels:
        app: logstash
    spec:
      containers:
      - name: logstash
        image: elastic/logstash:7.3.1
        volumeMounts:
        - name: config
          mountPath: /opt/logstash/config/containers.conf
          subPath: containers.conf
        command:
        - "/bin/sh"
        - "-c"
        - "/opt/logstash/bin/logstash -f /opt/logstash/config/containers.conf"
      volumes:
      - name: config
        configMap:
          name: logstash-conf
---
apiVersion: v1
kind: Service
metadata:
  labels:
    app: logstash
  name: logstash
  namespace: test-env
spec:
  ports:
  - port: 8080
    targetPort: 8080
  selector:
    app: logstash
  type: ClusterIP

[root@k8s01 elk]# kubectl apply -f logstash.yaml

deployment.apps/logstash created

service/logstash created

[root@k8s01 elk]# kubectl get -n test-env po|grep logstash

logstash-679cb9c998-hpt6w 1/1 Running 1 25s

Deploying the Elasticsearch cluster

[root@k8s01 elk]# vim es-statefulset.yaml

apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es
  namespace: test-env
spec:
  serviceName: elasticsearch
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      initContainers:
      - name: increase-vm-max-map
        image: busybox
        command: ["sysctl", "-w", "vm.max_map_count=262144"]
        securityContext:
          privileged: true
      - name: increase-fd-ulimit
        image: busybox
        command: ["sh", "-c", "ulimit -n 65536"]
        securityContext:
          privileged: true
      containers:
      - name: elasticsearch
        image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
        ports:
        - name: rest
          containerPort: 9200
        - name: inter
          containerPort: 9300
        resources:
          limits:
            cpu: 1000m
          requests:
            cpu: 1000m
        volumeMounts:
        - name: data
          mountPath: /usr/share/elasticsearch/data
        env:
        - name: cluster.name
          value: k8s-logs
        - name: node.name
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: cluster.initial_master_nodes
          value: "es-0,es-1,es-2"
        - name: discovery.zen.minimum_master_nodes
          value: "2"
        - name: discovery.seed_hosts
          value: "elasticsearch"
        - name: ES_JAVA_OPTS
          value: "-Xms512m -Xmx512m"
        - name: network.host
          value: "0.0.0.0"
  volumeClaimTemplates:
  - metadata:
      name: data
      labels:
        app: elasticsearch
    spec:
      accessModes: [ "ReadWriteOnce" ]
      storageClassName: managed-nfs-storage
      resources:
        requests:
          storage: 50Gi
---
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch
  namespace: test-env
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None
  ports:
  - port: 9200
    name: rest
  - port: 9300
    name: inter-node
---
kind: Service
apiVersion: v1
metadata:
  name: elasticsearch-client
  namespace: test-env
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  ports:
  - port: 9200
    name: rest
    nodePort: 30023
  - port: 9300
    name: inter-node
    nodePort: 30024
  type: NodePort
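Two values in this manifest depend on the replica count. cluster.initial_master_nodes must list the pod names the StatefulSet creates (its name plus an ordinal), and the "2" given for discovery.zen.minimum_master_nodes is the majority of three nodes; Elasticsearch 7.x works this quorum out by itself. The sketch below shows how both could be derived if the replica count changes. `statefulset_pods` and `quorum` are hypothetical helpers.

```shell
# Hypothetical helper: comma-separated pod names of a StatefulSet.
# $1 = StatefulSet name, $2 = replica count.
statefulset_pods() {
    name="$1"
    replicas="$2"
    i=0
    pods=""
    while [ "$i" -lt "$replicas" ]; do
        # Pods are named <statefulset>-<ordinal>, starting at 0.
        pods="${pods}${pods:+,}${name}-${i}"
        i=$((i + 1))
    done
    printf '%s\n' "$pods"
}

# Hypothetical helper: smallest majority of N master-eligible nodes.
quorum() {
    printf '%s\n' "$(( $1 / 2 + 1 ))"
}

# Example:
#   statefulset_pods es 3
#   quorum 3
```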

[root@k8s01 elk]# kubectl apply -f es-statefulset.yaml

statefulset.apps/es created

service/elasticsearch created

service/elasticsearch-client created
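Once the three pods are running, the cluster health API gives a quick indication of whether they formed a single cluster. A minimal sketch, assuming the NodePort 30023 from the elasticsearch-client Service; `health_status` is a hypothetical helper that extracts the status field with sed rather than a JSON parser.

```shell
# Hypothetical helper: read a GET /_cluster/health response on stdin
# and print the value of its "status" field (green, yellow or red).
health_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p'
}

# Example, using the NodePort from the Service above:
#   curl -s http://192.168.50.205:30023/_cluster/health | health_status
```

A green or yellow status with number_of_nodes equal to 3 in the full response suggests that discovery through the headless Service worked.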

Deploying Kibana

Create the Kibana configuration file.

[root@k8s01 elk]# cat config/kibana.yml

server.port: 5601
server.host: 0.0.0.0
elasticsearch.hosts: ["http://192.168.50.205:30023"]
kibana.index: ".kibana"
i18n.locale: "zh-CN"

[root@k8s01 elk]# kubectl create cm kibana-conf --from-file=config/kibana.yml -n test-env

configmap/kibana-conf created

Then deploy Kibana.

[root@k8s01 elk]# vim kibana.yaml

apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: test-env
  labels:
    app: kibana
spec:
  ports:
  - port: 5601
    nodePort: 30561
  type: NodePort
  selector:
    app: kibana
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  namespace: test-env
  labels:
    app: kibana
spec:
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
      - name: kibana
        image: docker.elastic.co/kibana/kibana:7.6.2
        env:
        - name: ELASTICSEARCH_HOSTS
          value: http://192.168.50.205:30023
        ports:
        - containerPort: 5601
        volumeMounts:
        - name: config
          mountPath: /usr/share/kibana/config/kibana.yml
          subPath: kibana.yml
      volumes:
      - name: config
        configMap:
          name: kibana-conf

[root@k8s01 elk]# kubectl apply -f kibana.yaml

service/kibana created

deployment.apps/kibana created

[root@k8s01 elk]# kubectl get -n test-env po|grep kibana

kibana-5565486fd4-4887b 1/1 Running 0 2m28s

Open Kibana, check that the new indices exist, and add an index pattern for them. The deployment is complete.
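If no index shows up in Kibana, listing the indices directly in Elasticsearch can narrow down where the pipeline stops. A small sketch using the `_cat/indices` API follows; `cat_indices_url` is a hypothetical helper, and the time-log-* pattern assumes the log_source value from the Filebeat config earlier in this post.

```shell
# Hypothetical helper: build a _cat/indices URL for an index pattern.
# $1 = Elasticsearch base URL, $2 = index name or wildcard pattern.
cat_indices_url() {
    # ${1%/} drops a trailing slash so the path is not doubled.
    printf '%s/_cat/indices/%s?v\n' "${1%/}" "$2"
}

# Example, using the NodePort from the elasticsearch-client Service:
#   curl -s "$(cat_indices_url http://192.168.50.205:30023 'time-log-*')"
```

An empty result points at Filebeat, Kafka or Logstash; a populated one means only the Kibana index pattern is missing.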

Copyright notice:

Unless otherwise stated, all articles on this site are licensed under CC BY-NC-SA 4.0. Please credit 爱吃可爱多 when reposting.
